Like "service" and before that "object", architecture was borrowed from the tangible context of physical construction and applied to intangible information systems without defining its new meaning in this foreign digital context. This blog returns the term to its historical context in order to mine that context for lessons that might apply to information systems. The (notional) historical context I'll be using is outlined by the following terms:

- Real-brick architecture is the modern approach to construction. It leverages trusted building materials (bricks, steel beams, etc) that are not available directly from nature. In other words, real-brick components are usually not available for free. They are commercial items provided by other members of society in exchange for a fee. The important word in that definition is trusted, which is ultimately based on past experience with that component, scientific testing and certification as fit for use. This is an elaborately collaborative approach that seems to be a uniquely human discovery, especially in its reliance on economic exchange for collaborative work.

- Mud-brick architecture is the primitive approach. Building materials (bricks) are made by each construction crew from raw materials (mud) found on or near the building site. Although the materials are free and might or might not be good enough, mud-brick architecture is almost obsolete today because mud bricks cannot be trusted by their stakeholders. Their properties depend entirely on whoever made them and the quality of the raw materials from that specific construction site. Only the brick makers have this knowledge. Without testing and certification, the home owner, their mortgage broker, the safety inspector, and future buyers have no way of knowing if those mud bricks are really safe.

- Pre-brick architecture is the pre-historic (cave man) approach. This stage predates the use of components in construction at all. Construction begins with some monolithic whole (a mountain for example), then living space is created by removing whatever isn't needed. This context is actually still quite important today. Java software is still built by piling up jar files into a monolithic class path and letting the class loader remove whatever isn't needed. Only newer modularity technologies like OSGI break from this mold.

The pre-brick example mainly relies on such subtractive approaches but additive approaches were used too. For example, mud and wattle construction involves daubing mud onto a wicker frame. I apologize to advocates of green architecture where pre-modern construction is enjoying a well-deserved resurgence. I chose these terms to evoke an evolution in construction techniques that the software industry could do well to emulate, not to disparage green architectural techniques (post-brick?) in any way.

Federal Enterprise Architecture and DoDAF

So how does this relate to the enterprise architecture movement in government or DoDAF in particular? The interest in these terms stems from congressional alarm at the ever-growing encroachment of information technology expenses on the national budget and disappointing returns from such investments. These and other triggering factors (like the Enron debacle) led to the Clinger-Cohen Act and other measures designed to give Congress and other stakeholders a line of sight into how the money was spent and how much performance improved as a result. The Office of Management and Budget (OMB) is now responsible for ensuring that all projects (>$1M) provide this line of sight. The Federal Enterprise Architecture (FEA) is one of their main tools for doing this government-wide. The Department of Defense Architecture Framework (DoDAF) is a closely related tool largely used by DOD.

I won't summarize this further because that is readily available at the above link. My goal here is to focus attention on what is missing from these initiatives. Their focus is on providing a framework for describing the processes a project manager will follow to achieve the performance objectives that congress expects from providing the money to fund the project. To return to the home construction example, congress is the mortgage broker with money that agencies compete for in budget proposals. Government agencies are the aspiring home owners who need that money to build better digital living spaces that might improve their productivity or deliver similar benefits of interest to congress. Each agency hires an architect to prepare an architecture in accord with the FEA guidelines. Such architectures specify what, not how. They provide a sufficiently detailed floor plan that congress can determine the benefits expected (number of rooms, etc), the cost, and the performance improvements that might result. They also provide assurances that approved building processes will be followed, typically SDLC (the much-maligned "waterfall" model). What's missing is after the jump.

What's Missing? Trusted Components

What's missing is the millennia of experience embodied in the distinction between real-brick, mud-brick, and pre-brick architectures. All of them could meet the same functional requirements: the same floor plan (benefits) and performance improvements. The differences are in non-functional requirements such as security (will the walls hold up over time?) and interoperability (do they offer standard interfaces for roads, power, sewer, etc). Any home-buyer knows that non-functional requirements are not "mere implementation details" that can be left to the builder to decide after the papers are all signed. That kind of "how" is the vast difference between a modern home and a mud-brick or mud and wattle hut, which is obviously of great interest to the buyer and their financial backers. This difference is what is omitted during the closing decision by the "what not how" orientation of the FEA process.

So let's turn to some specific examples of what is needed to adopt real-brick architectures for large government projects. It turns out that all the ingredients are available in various places, but have not yet been integrated into a coherent approach:

- Multigranular Cooperative Practices: This is my obligatory warning against SOA blindness, the belief that SOA is the only level of granularity that we need. But just as cities are made of houses, houses are made of bricks, and bricks are made of clay and sand, enterprise systems require many levels of granularity too. Although SOA standards are fine for inter-city granularity, there is no consensus on how or even whether to support inter-brick granularity, that is, techniques for composing SOA services from anything larger than what Java class libraries support. The only such effort I know of is OSGI but this seems to have had almost no impact in DOD.

- Consensus Standards: although much work remains, this is the strongest leg we have to stand on, as broad-based consensus standards are the foundation for all else. However, standards alone are necessary but not sufficient. Notice that pre-brick architecture is architecture without standards. Mud-brick architectures are based on standards (standard brick sizes, for example), but minus "trust" (testing, certification, etc). Real-brick architectures involve wrestling both standards and trust to the ground.

- Competing Trusted Implementations: The main gaps today are in this area so I'll expand on these below:

- Building Codes and Practices: bricks alone are necessary but insufficient. Building codes specify approved practices for assembling bricks to make buildings. This is almost virgin territory today for an industry that is still struggling to define standard bricks.

- Construction Patterns and Beyond: This alludes to Christopher Alexander's work on patterns in architecture and to the widespread adoption of the phrase in software engineering. It has since surfaced as a key concept in DOD's Technical Reference Model (TRM) which has adopted Gartner Group's term, "bricks and patterns". However, this emphasizes the difficulty of transitioning from pre-brick to real-brick architectures. Gartner uses "brick" to mean a SOA service that can be reused to build other services or a standard. That is not at all how I use that term. A brick is a concrete sub-SOA component that can be composed with related components to create a secure SOA service, just as modern houses are composed of bricks. True, standards help by specifying the necessary interfaces, but they are never confused with bricks which only implement or comply with that standard. Standards are abstract; bricks are concrete. They exist in entirely different worlds; one mental, the other physical.

Implementations of consensus standards are rarely a problem; the problem is that they're either not competing or not trusted. For example, SOA security is a requirement of each and every one of the SOA services that will be needed. This requirement is addressed (albeit confusingly and verbosely, but that's inevitable with consensus standards) by the WS-Security and Liberty Alliance standards. And those standards are implemented by almost every middleware vendor's access management products, including Microsoft, Sun, Computer Associates and others.

Trusted implementations are not as robust but the road ahead is at least clear, albeit clumsy and expensive today. The absence of support for strong modularity (à la OSGI) in tools such as Java doesn't help, since changes in low-level dependencies (libraries) can and will invalidate the trust in everything that depends on them. Sun claims to have submitted OpenSSO for Common Criteria accreditation at EAL3 last fall (as I recall), and I heard that Boeing has something similar planned for its proprietary solution. I've not tracked the other vendors as closely but expect they all have similar goals.
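To make the dependency point concrete, here is a minimal sketch in Python of how a change to one low-level library ripples upward. The component names and the dependency graph are hypothetical, invented purely for illustration; the only idea taken from the text is that a change invalidates the trust in everything that depends, directly or indirectly, on the changed component.

```python
from collections import defaultdict, deque


def invalidated_by(changed, depends_on):
    """Return every component whose trust is invalidated when `changed` changes.

    `depends_on` maps each component to the list of components it uses directly.
    """
    # Invert the graph: dependency -> components that use it directly.
    dependents = defaultdict(set)
    for component, deps in depends_on.items():
        for dep in deps:
            dependents[dep].add(component)

    # Breadth-first walk upward from the changed component.
    affected = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for user in dependents[current]:
            if user not in affected:
                affected.add(user)
                queue.append(user)
    return affected


# Hypothetical dependency graph (all names are made up for this sketch).
DEPENDS_ON = {
    "access-service": ["sso-core", "xml-parser"],
    "sso-core": ["crypto-lib"],
    "audit-service": ["xml-parser"],
    "crypto-lib": [],
    "xml-parser": [],
}

print(sorted(invalidated_by("crypto-lib", DEPENDS_ON)))
```

In this toy graph a change to `crypto-lib` forces re-certification of `sso-core` and, through it, `access-service`, while `audit-service` is untouched. Strong modularity is what would keep that affected set small and explicit.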

Competing trusted implementations is a different matter that may well take years to resolve. Becoming the sole-source vendor of a trusted implementation is every vendor's goal because they can leverage that trust to almost any degree, generally at the buyer's expense. Real bricks are inexpensive because they are available from many vendors that compete for the lowest price.

Open Source Software

In view of the importance of the open source movement in industry and its growing adoption in government, it's important to point out why it doesn't appear in the above list of critical changes. What matters to the enterprise is that there be competing trusted implementations of consensus standards, not what business model was used to produce those components.

- Trust implies a degree of encapsulation that open source doesn't provide. Trust seems to imply some kind of "Warranty void if opened" restrictions, at least in every context I've considered.

- The cost of achieving the trusted label (the certification and accreditation process is NOT cheap) seems very hard for the open source business model to support.

- The difference between Microsoft Word (proprietary) and OpenOffice (open source) may loom large to programmers, but not to enterprise decision-makers more focused on whether it will perform all the functions their workers might need.

- Open Source may make more sense for the smallest-granularity components at the bottom of the hierarchy (mud and clay) that others assemble to make (often proprietary) larger-granularity components.

Concrete Recommendations

Enough abstractions. It's time for some concrete suggestions as to how DOD might put them into use in the FEA/DoDAF context.

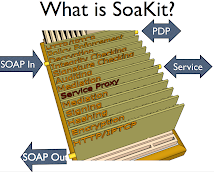

Beware of one-size-fits-all panacea solutions: SOA is great for horizontal integration of houses to build cities so that roads, sewers and power will interoperate. But SOA is extremely poor at vertical integration, for composing houses from smaller components such as bricks. Composing SOA services from Java class libraries is mud and wattle construction, which is not even as advanced as mud-brick construction. One way to see this is in SOA security, for which standards exist as well as (somewhat) trusted implementations. SOA security can be factored into security features (access controls, confidentiality, integrity, non-repudiation, mediation, etc) that can be handled either by monolithic solutions like OpenSSO or repackaged as pluggable components as in SoaKit. Yes, the same features can be packaged as SOA services. But nobody would tolerate the cost of parsing SOAP messages as they proceed through multiple SOA-based stages. Lightweight (sub-SOA) integration technologies like OSGI and SoaKit (based on OSGI plus lightweight threads and queues) would be ideal for this role and would add no performance cost at all.
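As a rough illustration of what "pluggable components" below the SOA level might look like, here is a minimal Python sketch. It is not how OpenSSO, OSGI or SoaKit actually work; every name in it (`Message`, `access_control`, `integrity`, `compose`) is hypothetical. The point it tries to show is only that each security feature can be a small in-process stage that hands the same already-parsed message object to the next stage, so nothing is re-serialized or re-parsed between stages.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    """An already-parsed request; stages share this object in memory."""
    sender: str
    body: str
    signature_ok: bool
    log: list = field(default_factory=list)


class Rejected(Exception):
    """Raised by a stage that refuses to pass the message on."""


def access_control(allowed):
    """Build an access-control stage for a given set of allowed senders."""
    def stage(msg):
        if msg.sender not in allowed:
            raise Rejected(f"sender {msg.sender!r} is not authorized")
        msg.log.append("access_control")
        return msg
    return stage


def integrity(msg):
    """Integrity stage: here just a flag check standing in for real verification."""
    if not msg.signature_ok:
        raise Rejected("signature check failed")
    msg.log.append("integrity")
    return msg


def compose(*stages):
    """Plug stages together into one callable, applied in order."""
    def pipeline(msg):
        for stage in stages:
            msg = stage(msg)
        return msg
    return pipeline


# Assemble a "secure service" front end from two bricks.
secure = compose(access_control({"alice"}), integrity)
result = secure(Message(sender="alice", body="hello", signature_ok=True))
print(result.log)
```

Swapping one vendor's integrity stage for another's would then be a matter of passing a different callable to `compose`, which is the kind of substitution that competing bricks need. Whether such a design really adds no performance cost would have to be measured, not assumed from a sketch like this.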

Publish an approved list of competing trusted implementations: This doesn't mean to bless just one and call it done. That is a guaranteed path to proprietary lock-in. Both "trusted" and "competing" must be firm requirements. At the very least, trust must mean components that have passed stringent security and interoperability testing, and competing means more than one vendor's components must have made it through those tests.
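The two requirements can be stated as a simple rule. The Python sketch below is only an illustration under assumed data: the vendors are placeholders and the pass/fail results are invented, not real test outcomes. A standard makes the approved list only if at least two distinct vendors have components that passed testing for it.

```python
from collections import defaultdict


def approved_list(candidates):
    """Return {standard: [vendors]} for standards with competing trusted implementations.

    `candidates` is an iterable of (standard, vendor, passed_testing) tuples.
    """
    trusted = defaultdict(set)
    for standard, vendor, passed_testing in candidates:
        if passed_testing:          # "trusted": passed the required testing
            trusted[standard].add(vendor)
    # "competing": more than one vendor made it through those tests
    return {
        standard: sorted(vendors)
        for standard, vendors in trusted.items()
        if len(vendors) >= 2
    }


# Hypothetical candidates; vendors and results are made up for this sketch.
CANDIDATES = [
    ("WS-Security", "Vendor A", True),
    ("WS-Security", "Vendor B", True),
    ("WS-Security", "Vendor C", False),
    ("Liberty Alliance", "Vendor A", True),
]

print(approved_list(CANDIDATES))
```

Here the second standard is left off the list even though one vendor passed, because a single trusted implementation is exactly the sole-source situation the list is meant to prevent.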

Expose the use of government-approved components in FEA/DoDAF: These currently expose only what is to be constructed and its impact on agency performance to stakeholders, leaving how to be decided later. How is a major stakeholder issue that should be decided well before project funding, such as whether components from the government-approved list will be used to meet non-functional requirements such as security and interoperability. As a rule, functional requirements can be met through ad hoc construction techniques. Security and interoperability should never be met that way.

Leverage trusted components in the planning process: The current FEA/DoDAF process imposes laborious (expensive!) requirements that each of hundreds of SOA-based projects must meet. Each of those projects has similar if not identical non-functional requirements, particularly in universal areas like security and interoperability. If trusted components were used to meet those requirements, the cost of elaborating those requirements could be borne once and shared across hundreds of similar projects.

So what?

OMB's mandate to provide better oversight is likely to accomplish exactly that if it doesn't engender too much bottom-up resistance along the way. But to belabor an overworked Titanic analogy, that is like concentrating on auditing the captain's books when the real problem is to stop the ship from sinking.

The president's agenda isn't better oversight. That's someone else's derived goal which might or might not be a means to that end. The president's goal is to improve the performance of government agencies. Insofar as more reliable and cost-effective use of networked computers is a way of doing that, and since hardware is rarely an obstacle these days, the mainline priority is not more oversight but reducing software cost and risk. Better oversight is in a possibly necessary but definitely supporting role.

The best ideas I know for doing that are outlined in this blog. They've been proven by mature industries' millennia of experience against which software's 30-40 years is negligible.

6 comments:

More than just the meaning of components is missing. Far too many people think that an "architect" is the person who architects a building. No, an architect designs the building, or perhaps more properly the general design, look and feel. The verb is "design".

The Government's track record on software projects is worse than terrible. In the 90s, the DoD had less than a 10% success rate with software. I see no evidence that they are getting much better.

On the contrary, the agile software fad has made people think you can build anything in four months. It's getting harder to find people who understand the exponential complexity of large systems.

Keep pushing, Brad, but I'm not seeing much improvement in process, communications, or execution.

Although I agree govt's record is hardly inspiring, I'm actually an optimist and see FEA as a sign of improvement. Problem is, FEA imposes a huge documentation burden on project managers who are now forced to project detailed architectural information up the chain to decision makers, who use this information to decide which projects will get funded. The components-based approach I outlined here can help by reducing the documentation burden (trusted components don't need to be designed, documented and implemented). That leaves a fundamental problem I see no easy solution to. The tangible construction industry uses distributed decision-making by folks in direct contact with each construction site. The government uses central planning, which failed in Russia with such catastrophic results. I don't see ready solutions because digital components are made of bits, which don't abide by the conservation laws that mediate distributed decision making. And the belief in central planning persists in govt circles in spite of its consistently poor results. I have no solution for that.

However I don't see agile as a fad, although I do get tired of all the hype. I see it as the goal to which we're striving, government included. Problem is, agile practices work best when the end-user is actively involved to answer requirements questions. This is almost always impossible in government work, where contractors can only talk with contractual staff, not with actual users. If anyone knows of ways to solve that, I'm all ears.

Agile works well when there is close communication so that requirements can be iterated. That means the customer has to talk directly to the developers, and vice versa. That is impossible in any large scale Federal contract.

If you apply agile in a world where there are many layers of folks on both sides (contractor and agency) then you are doomed.

I've been exploring a concept for a year or so: that it's impossible to do large scale commercial software anymore. I was thinking of the commercial space, say a Fannie Mae accounting system developed by IBM, or BearingPoint, etc. I think they are impossible. And they have a much higher chance of working than a typical Federal agency effort.

See Charles Rossotti's book: Many Unhappy Returns, about his failures at the IRS. Charles ran AMS for 25+ years, successfully delivering complex systems to customers. He failed as the Commissioner of the IRS, from the inside.

This is an interesting analysis of trust architectures. The company I work for, Galois, is working on building trusted components. We're using Haskell and its rich type system to develop one level of trust (mostly limited by the complexity of a garbage-collected runtime). We're also using Isabelle-HOL (higher order logic) to construct programs whose entire behavior can be formally reasoned about.

We've developed a cross-domain file storage system (based on the WebDAV protocol), called the TSE, which uses Haskell to build "medium assurance" front-ends to a "high assurance" cross-domain component constructed in C, with a formal proof that it enforces a Bell-LaPadula information flow policy.

I saw in this month's MacTech that you've dabbled in Haskell -- I'd love an opportunity to exchange stories about our experiences in this realm.

Thanks, Dylan. Actually more than dabbled, but never managed to get it with Haskell. I'm OK with functional but lost it with monads and finally just gave up. Happy to swap stories; just email. It's on the web.
